NSFW JS

Client-side indecent content checker powered by TensorFlow.js

NSFW JS is a client-side tool that uses TensorFlow.js to detect indecent content in images with high accuracy. It is fast and easy to integrate into web applications, which makes it a good fit for platforms that need to keep user-uploaded images safe.

2024-07-01


NSFW JS Product Information


What Is NSFW JS?

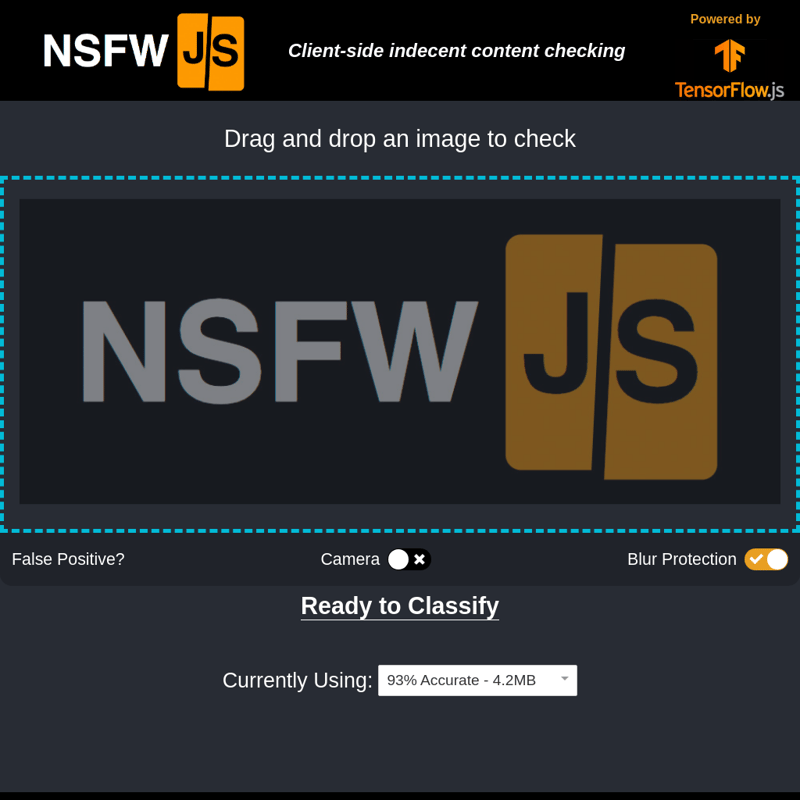

NSFW JS is a client-side tool designed to check for indecent content in images. Powered by TensorFlow.js, it offers a high level of accuracy and efficiency, making it a reliable choice for maintaining content safety on platforms that host user-uploaded images.

Features

- Client-side Processing: Ensures that content checking happens directly on the user's device, enhancing privacy and speed.

- High Accuracy: Currently reports 93% accuracy with a 4.2 MB model.

- TensorFlow.js Integration: Uses TensorFlow.js to run machine learning directly in the browser.

- Ease of Use: Simple to integrate with existing web applications.

- False Positive Handling: Mechanisms in place to manage and reduce false positives.

- Blur Protection: Provides options to blur indecent content automatically.

Use Case

NSFW JS is ideal for any web platform that allows user-uploaded images. It can be used to automatically check and filter out indecent content, ensuring a safe and clean user experience. Examples include social media sites, forums, and image hosting services.

FAQ

Q: How accurate is NSFW JS?

A: NSFW JS has an accuracy rate of 93%, making it a reliable tool for detecting indecent content.

Q: Does the content checking happen on the server or client side?

A: The content checking happens on the client side, ensuring user privacy and faster processing.

Q: Can NSFW JS handle false positives?

A: Yes, NSFW JS includes mechanisms to manage and reduce false positives.

Q: How large is the model used by NSFW JS?

A: The model size is 4.2 MB.

Q: What technology powers NSFW JS?

A: NSFW JS is powered by TensorFlow.js, a machine learning library for JavaScript.

How to Use

- Integrate the Library: Add the NSFW JS library to your project.

- Load the Model: Load the NSFW JS model in your application.

- Check Content: Use the library's functions to check images for indecent content.

- Handle Results: Take appropriate actions based on the results, such as blurring indecent images or alerting users.
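The "Handle Results" step above can be sketched as a small decision function. This is a minimal illustration, not the library's own logic: it assumes each prediction is an object with a `className` and a `probability`, and that the classes are Drawing, Hentai, Neutral, Porn, and Sexy, as described in the project's README. The `Policy` type, the threshold values, and the `decideAction` helper are hypothetical names introduced here for the example.

```typescript
// Assumed shape of one classifier result. The five class names follow the
// NSFW JS README; verify them against the version you install.
type ClassName = "Drawing" | "Hentai" | "Neutral" | "Porn" | "Sexy";

interface Prediction {
  className: ClassName;
  probability: number; // between 0 and 1
}

type Action = "allow" | "blur" | "block";

// Hypothetical policy: these thresholds are illustrative, not library defaults.
interface Policy {
  blockAt: number; // block when explicit probability reaches this value
  blurAt: number;  // blur when explicit + suggestive probability reaches this value
}

const DEFAULT_POLICY: Policy = { blockAt: 0.85, blurAt: 0.5 };

// Look up the probability for one class, treating a missing class as 0.
function probabilityOf(predictions: Prediction[], name: ClassName): number {
  const match = predictions.find((p) => p.className === name);
  return match ? match.probability : 0;
}

// Map a set of predictions to an action the application can take.
function decideAction(
  predictions: Prediction[],
  policy: Policy = DEFAULT_POLICY
): Action {
  const explicit =
    probabilityOf(predictions, "Porn") + probabilityOf(predictions, "Hentai");
  if (explicit >= policy.blockAt) return "block";

  const suggestive = explicit + probabilityOf(predictions, "Sexy");
  if (suggestive >= policy.blurAt) return "blur";

  return "allow";
}

// In a browser the predictions would come from the library, roughly:
//   const model = await nsfwjs.load();
//   const predictions = await model.classify(imgElement);
// Here a hand-written sample stands in for that call.
const sample: Prediction[] = [
  { className: "Neutral", probability: 0.1 },
  { className: "Sexy", probability: 0.6 },
  { className: "Porn", probability: 0.2 },
  { className: "Drawing", probability: 0.05 },
  { className: "Hentai", probability: 0.05 },
];

console.log(decideAction(sample)); // prints "blur"
```

Keeping the policy separate from the classifier call makes the thresholds easy to tune: a platform that sees too many false positives could raise `blurAt`, while a stricter platform could lower `blockAt`.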

For more details and examples, visit the NSFW JS GitHub repository.
